Recursive Sentiment and Tonal Cognition Framework

- Troy Lowndes

- 1 day ago

Updated: 5 hours ago

Why This Architecture Works with ParasiTick

The Core Idea

Most AI language models learn tone and emotion from a fixed set of training data, then stop improving. This model doesn’t stop. It keeps teaching itself by analysing its own output, spotting where it got tone wrong, and feeding those lessons back into the next attempt. Think of it like a musician who records themselves, listens back, identifies where the feel was off, then plays again.

Over and over. Each pass gets sharper. But to do that well, you need a richer map of what tone actually is. Binary sentiment - positive, negative, neutral - is not a map. It’s a single pixel.

The SpectralBinary Framework

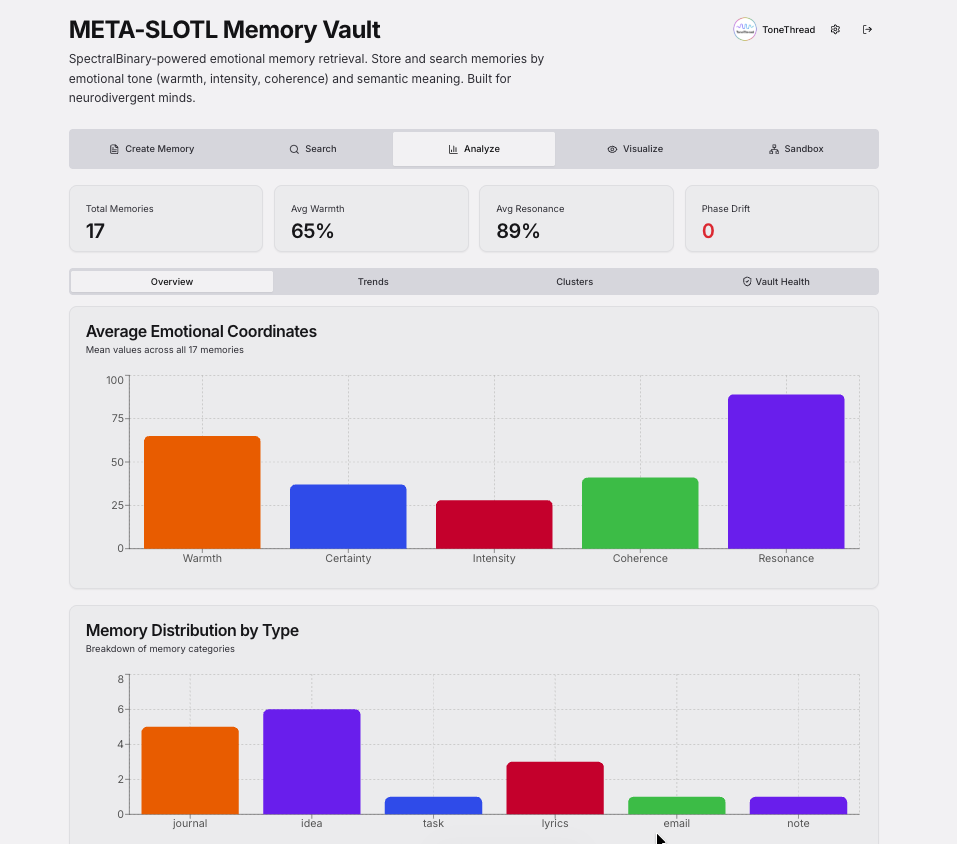

The SpectralBinary framework gives the model a full five-dimensional coordinate system to work from.

SpectralBinary measures tone across five axes, not one.

Four axes capture the quality of a signal in the moment. The fifth tracks what happens to it over time.

1. Warmth ↔ Detachment: Relational temperature. How much a signal reaches toward or pulls away from connection.

2. Certainty ↔ Ambiguity: Confidence architecture. How clearly a signal commits to a position or holds space open.

3. Intensity ↔ Restraint: Energetic force. How much pressure a signal carries or consciously withholds.

4. Coherence ↔ Conflict: Structural integrity. Whether tone, content, and intent are aligned or quietly working against each other.

5. Resonance: The meta-axis. Not what a signal is, but how long it echoes and how much it shapes what follows.

Axes 1–4 answer: what is this signal? Axis 5 answers: what does it do across time? Existing frameworks measure moments. SpectralBinary measures moments and their gravity.

Two messages can score identically across the first four axes and have completely different Resonance. One lands and dissipates. The other pivots a relationship, a meeting, a project, a team.
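
To make the five axes concrete, here is a minimal Python sketch of one scored signal. The class name `ToneVector`, the helper `same_moment_different_gravity`, and the numeric ranges (axes 1-4 in [-1, 1], Resonance in [0, 1]) are assumptions for illustration only; nothing here is the framework's actual representation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToneVector:
    """One signal scored on the five SpectralBinary axes.

    Assumed convention (not from the article): axes 1-4 are bipolar
    scores in [-1, 1]; resonance is a weight in [0, 1].
    """
    warmth: float      # +1 warmth, -1 detachment
    certainty: float   # +1 certainty, -1 ambiguity
    intensity: float   # +1 intensity, -1 restraint
    coherence: float   # +1 coherence, -1 conflict
    resonance: float   # 0 dissipates at once, 1 shapes what follows

    def __post_init__(self):
        # Reject scores outside the assumed ranges.
        for name in ("warmth", "certainty", "intensity", "coherence"):
            if not -1.0 <= getattr(self, name) <= 1.0:
                raise ValueError(f"{name} must be in [-1, 1]")
        if not 0.0 <= self.resonance <= 1.0:
            raise ValueError("resonance must be in [0, 1]")

    def moment(self):
        """Axes 1-4: what the signal is, ignoring time."""
        return (self.warmth, self.certainty, self.intensity, self.coherence)


def same_moment_different_gravity(a, b, tol=1e-9):
    """True when two signals match on axes 1-4 but differ in resonance."""
    same = all(abs(x - y) <= tol for x, y in zip(a.moment(), b.moment()))
    return same and abs(a.resonance - b.resonance) > tol


# Two messages with identical in-the-moment scores and very different echoes.
filler = ToneVector(0.6, 0.4, -0.2, 0.8, resonance=0.05)
pivotal = ToneVector(0.6, 0.4, -0.2, 0.8, resonance=0.90)
print(same_moment_different_gravity(filler, pivotal))
```

A four-axis comparison would treat `filler` and `pivotal` as the same message. Only the fifth field separates them.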

Resonance is what the thread in ToneThread actually refers to: the tensile quality connecting tones across time.

The Loop (Step by Step)

The recursive training loop maps directly onto these five axes.

Each pass through the loop measures the model’s output against all five dimensions, not just whether it sounds emotionally appropriate.

1. The model generates a response to a prompt, conversation, or piece of text.

2. That response gets fed back in for inspection, not by the main model itself, but by a set of specialised evaluator layers. Each one looks at a different dimension of tone:

   - Sentiment classification: what emotional register is this sitting in?

   - Rhetorical tone detection: how is this being said? Persuasive, passive, assertive, deflective?

   - Emotional coherence mapping: does the tone hold together, or does it drift and contradict?

   - Resonance scoring: how much weight does this signal carry? Is it pivotal or filler? Will it persist or dissipate?

   - ParasiTick detection: is there something subtly wrong here? A manipulation? A distortion? A phrase that sounds supportive but actually undermines?

3. Quality scores come back from all evaluator layers, measuring tonal consistency, empathy alignment, narrative plausibility, and resonance weight.

4. The main model updates its internal understanding based on those scores, adjusting how it weighs and interprets emotional signals.

5. It generates again. Better this time. Then the loop repeats.
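
The shape of that loop can be sketched in a few lines of Python. This is a toy under heavy assumptions: `ToyModel`, `run_loop`, and both scorers are hypothetical stand-ins, the "model" has a single parameter (how many hedging words it emits), and a real system would replace the `update` rule with an actual training step.

```python
from typing import Callable, Dict, List

# Each evaluator maps a response to a score in [0, 1]. The article names the
# layers but not their internals, so every scorer here is a toy placeholder.
Evaluator = Callable[[str], float]


class ToyModel:
    """Stand-in for the main model; its only 'parameter' is a hedge count."""

    def __init__(self, hedges: int = 3) -> None:
        self.hedges = hedges

    def generate(self, prompt: str) -> str:
        return prompt + " maybe" * self.hedges

    def update(self, scores: Dict[str, float]) -> None:
        # Toy update rule: drop one hedge while the tone score is below 1.0.
        if scores["rhetorical_tone"] < 1.0 and self.hedges > 0:
            self.hedges -= 1


def rhetorical_tone(response: str) -> float:
    # Toy scorer: each "maybe" costs 0.25, floored at 0.
    return max(0.0, 1.0 - 0.25 * response.split().count("maybe"))


def parasitick(response: str) -> float:
    # Stub: always reports 1.0, meaning "nothing suspicious found".
    return 1.0


def run_loop(model: ToyModel, evaluators: Dict[str, Evaluator],
             prompt: str, passes: int) -> List[Dict[str, float]]:
    history = []
    for _ in range(passes):
        response = model.generate(prompt)                   # generate
        scores = {name: ev(response)                        # inspect and score
                  for name, ev in evaluators.items()}
        model.update(scores)                                # adjust the model
        history.append(scores)                              # then go again
    return history


model = ToyModel(hedges=3)
history = run_loop(
    model,
    {"rhetorical_tone": rhetorical_tone, "parasitick": parasitick},
    "Thanks for the update",
    passes=4,
)
tone_by_pass = [h["rhetorical_tone"] for h in history]
print(tone_by_pass)  # [0.25, 0.5, 0.75, 1.0]
```

The evaluators sit outside the model and are passed in as a dictionary, which mirrors the point that inspection is done by separate layers rather than by the main model itself.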

The Corpus: Real + Synthetic

Alongside the loop, the system builds a growing library of examples. Some are curated from real-world communication. Others are auto-generated to deliberately surface edge cases: sarcasm layered under politeness, cultural tone shifts, the kind of affective drift where someone starts warm and slowly turns cold without ever saying anything overtly hostile.

Recursive testing against this corpus strengthens the model’s ability to handle non-obvious tone transitions - the ones that trip up every other system - and to correctly score their Resonance.

Because some of the most dangerous communication patterns are not just tonally subtle. They are built to persist.
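
As one illustration of an auto-generated edge case, the Python sketch below builds a synthetic "warm to cold" conversation and checks for affective drift. It assumes warmth is scored per turn in [-1, 1]; the function names `synthetic_drift` and `detect_drift` and both thresholds are invented for this example, not taken from the system described here.

```python
def synthetic_drift(start=0.8, end=-0.6, turns=8):
    """Linearly interpolated warmth scores: warm opening, cold close."""
    step = (end - start) / (turns - 1)
    return [start + i * step for i in range(turns)]


def detect_drift(warmth, max_step=0.3, min_total_drop=1.0):
    """Flag a conversation that cools a lot without any single abrupt turn.

    max_step: largest per-turn change still counted as 'gradual'.
    min_total_drop: how far warmth must fall overall to count as drift.
    """
    steps = [b - a for a, b in zip(warmth, warmth[1:])]
    gradual = all(abs(s) <= max_step for s in steps)
    total_drop = warmth[0] - warmth[-1]
    return gradual and total_drop >= min_total_drop


drifting = synthetic_drift()          # cools by about 0.2 per turn
steady = [0.5] * 8                    # no change at all
blow_up = [0.8, 0.8, -0.6, -0.6]      # one overtly hostile turn, not drift

print(detect_drift(drifting), detect_drift(steady), detect_drift(blow_up))
```

The `blow_up` case is deliberately excluded: an overtly hostile turn is easy to catch elsewhere. The drift check targets the sequence where no single message looks wrong.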

What Emerges

Over enough iterations, something meaningful happens. The model doesn’t just learn what emotions sound like. It develops a form of meta-cognition: it learns how it learns emotion. It starts recognising its own blind spots and compensating for them.

The output stops being “AI that guesses at tone” and starts becoming AI that understands and mirrors tone responsively - including understanding which signals will echo and which will fade.

The Scale: From One Person to Everything

Here’s something worth sitting with. This framework began with a single person trying to understand their own communication patterns. That’s the origin point. One human. Five axes. A system for making sense of tone that felt true rather than approximate.

From that singular core, SpectralBinary scales without losing fidelity.

Individual: One person understanding and translating their own tone. The origin of the whole framework.

Team: Tone monitoring and resonance tracking across small group communication.

Business unit: Federated models calibrated to each division’s own baseline and risk profile.

Enterprise: Aggregated pattern intelligence across all units. Anonymised, systemic, early warning at scale.

Civilisation: The same five axes applied to institutional communication, public discourse, and collective meaning-making.

Same engine. Same axes. Same thread. The only thing that changes is the scale of the signal. That fractal property isn’t incidental. It’s what makes the framework genuinely foundational rather than a clever tool for a specific use case.
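
A rough Python sketch of what "calibrated to each division's own baseline" could mean in practice: each unit's latest score on one axis is compared with that unit's own history, and only the unit-level deviation travels upward. The function names, the z-score approach, and the threshold of 2.0 are assumptions chosen for illustration.

```python
from statistics import mean, pstdev


def calibrate(unit_scores):
    """Per-unit baseline: mean and spread of historical scores on one axis."""
    return mean(unit_scores), pstdev(unit_scores)


def deviation(score, baseline):
    """How unusual a score is for this unit, in standard deviations."""
    mu, sigma = baseline
    return 0.0 if sigma == 0 else (score - mu) / sigma


def enterprise_alerts(latest, baselines, threshold=2.0):
    """Names of units whose latest score is unusual for that unit.

    Only unit-level deviations are compared here, never raw messages.
    """
    return sorted(
        unit for unit, score in latest.items()
        if abs(deviation(score, baselines[unit])) >= threshold
    )


# Hypothetical warmth histories: sales normally runs warm, legal runs neutral.
history = {
    "sales": [0.6, 0.7, 0.65, 0.7, 0.6],
    "legal": [-0.1, 0.0, -0.05, 0.0, -0.1],
}
baselines = {unit: calibrate(scores) for unit, scores in history.items()}

# The same raw warmth score lands in both units this week.
latest = {"sales": 0.0, "legal": 0.0}
alerts = enterprise_alerts(latest, baselines)
print(alerts)  # ['sales']
```

A warmth of 0.0 is ordinary for the legal team and a sharp departure for sales, which is why the comparison runs against each unit's own baseline rather than a global one.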

META-SLOTL - A New Lens on Recursive Model Training

A Multilayered Encoding and Tone-Adaptive Spectral-Layered Optimisation Task-Loop for High-Fidelity Recursive LLM Training.

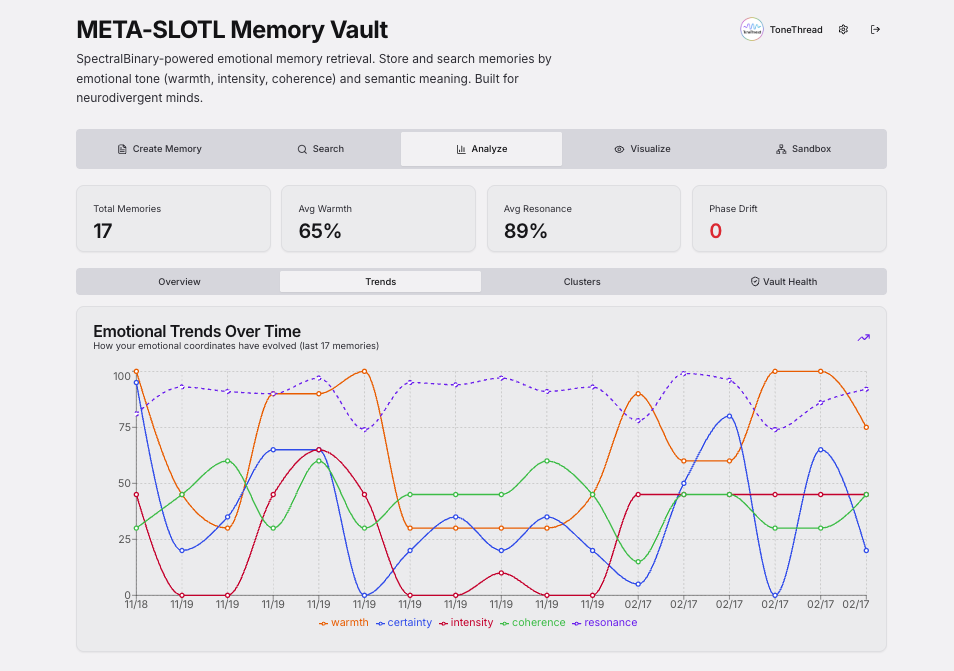

Memories are not stored chronologically. They are stored by their SpectralBinary coordinates: warmth, certainty, intensity, coherence, and, critically, Resonance weight.

High-Resonance signals are preserved with full fidelity. Low-Resonance filler is compressed or discarded. The Vault remembers what mattered and forgets what didn’t - which is what human memory does, and what most AI memory systems fundamentally fail to replicate.
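
The retention rule can be sketched as follows. This is a minimal Python illustration, assuming Resonance is a weight in [0, 1]; the `Memory` class, the `consolidate` and `recall` helpers, the two thresholds, and the use of plain truncation as "compression" are all stand-ins, not the Vault's actual design.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Memory:
    text: str
    coords: Tuple[float, float, float, float]  # warmth, certainty, intensity, coherence
    resonance: float


def consolidate(memories: List[Memory], keep_at: float = 0.7,
                drop_below: float = 0.2, summary_chars: int = 40) -> List[Memory]:
    """Keep high-Resonance memories whole, shrink the middle, drop the filler."""
    kept = []
    for m in memories:
        if m.resonance >= keep_at:
            kept.append(m)                                   # full fidelity
        elif m.resonance >= drop_below:
            # Crude stand-in for compression: keep only the opening characters.
            kept.append(Memory(m.text[:summary_chars], m.coords, m.resonance))
        # Anything below drop_below is discarded.
    return sorted(kept, key=lambda m: m.resonance, reverse=True)


def recall(memories: List[Memory], coords, k: int = 1) -> List[Memory]:
    """Look memories up by tonal position rather than by time."""
    return sorted(memories, key=lambda m: math.dist(m.coords, coords))[:k]


vault = consolidate([
    Memory("Ok, thanks.", (0.1, 0.2, -0.5, 0.9), 0.05),
    Memory("I no longer trust the numbers this team reports, and I will be "
           "raising it with the board.", (-0.7, 0.9, 0.6, 0.8), 0.95),
    Memory("Can we move Thursday's sync to the afternoon if that suits everyone?",
           (0.4, -0.2, -0.3, 0.9), 0.35),
])
nearest = recall(vault, (-0.6, 0.8, 0.5, 0.8))[0]
print(len(vault), nearest.resonance)  # 2 0.95
```

Note that insertion order plays no part in either function: what survives depends on Resonance, and what is retrieved depends on distance in the four-axis space.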

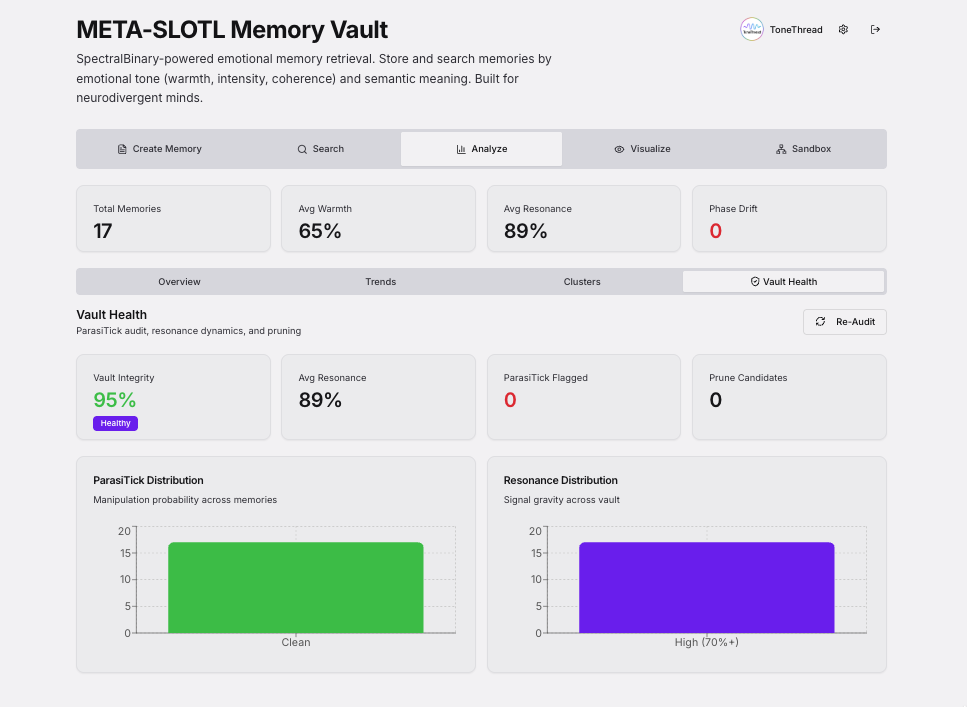

An always-on audit log monitors for anomalies in real time. Embedded directly in the evaluation loop, the Vault acts as a silent sentinel. It flags parasitic patterns: the microscopic distortions, the tonal sleights-of-hand, the seemingly flawless phrases that sail through every other quality gate yet smuggle hidden intent.

Without this layer, recursive self-improvement doesn’t elevate the model. It hones it into a superior manipulator.

With META-SLOTL, the model learns to distinguish emotional resonance from emotional exploitation.

In enterprise terms: any organisation deploying emotionally intelligent AI without a dedicated manipulation detection layer isn’t building a tool. It’s building a weapon that will eventually turn on its own people.

ParasiTick is the difference between an AI that understands you, and an AI you can trust.

Why ParasiTick Is the Key Piece

Every other evaluator in this loop is measuring quality. ParasiTick is measuring integrity.

Without ParasiTick, you have a system that gets better at producing emotionally fluent responses. That sounds good until you realise: emotionally fluent includes emotionally manipulative. A model trained purely on sentiment, tone, and coherence will get excellent at saying things that feel right - including things that are subtly coercive, gaslighting, love-bombing, or strategically vague.

High Resonance makes this worse, not better. A manipulative signal with high Resonance is one that persists, shapes subsequent interactions, and builds influence over time. The model needs to detect it early, flag it, and refuse to optimise for it.

ParasiTick as a first-class evaluator means integrity isn’t bolted on after the fact. It’s baked into every training cycle from the start.
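
One way to picture "refuse to optimise for it" is as a gate on the training signal. The Python sketch below is an assumption-laden illustration: `training_reward`, the 0.5 risk threshold, and the choice to penalise flagged outputs in proportion to their Resonance are hypothetical, and a ParasiTick risk score in [0, 1] is assumed to be supplied by some upstream detector.

```python
def training_reward(quality_scores, resonance, parasitick_risk, risk_threshold=0.5):
    """Combine evaluator scores into a single training signal.

    quality_scores: dict of evaluator name -> score in [0, 1]
    resonance: weight in [0, 1]
    parasitick_risk: estimated manipulation risk in [0, 1]
    """
    if not quality_scores:
        raise ValueError("at least one quality score is required")
    if parasitick_risk >= risk_threshold:
        # Flagged: quality is ignored entirely, so fluency cannot buy reward.
        # The penalty grows with resonance, since a manipulative signal that
        # persists does more damage than one that fades.
        return -resonance
    quality = sum(quality_scores.values()) / len(quality_scores)
    # Unflagged: average quality, discounted by any residual risk.
    return quality * (1.0 - parasitick_risk)


fluent = {"tone": 0.95, "empathy": 0.95, "coherence": 0.95}
plain = {"tone": 0.8, "empathy": 0.7, "coherence": 0.9}

honest = training_reward(plain, resonance=0.9, parasitick_risk=0.1)
manip_fading = training_reward(fluent, resonance=0.2, parasitick_risk=0.8)
manip_lasting = training_reward(fluent, resonance=0.9, parasitick_risk=0.8)
print(honest > 0, manip_lasting < manip_fading < 0)  # True True
```

In this sketch the more fluent response scores worse than the plainer one once it is flagged, and worse again when it is likely to persist.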

Why This Architecture Works

The recursive loop is built so the model keeps improving rather than plateauing. Every output becomes training data for the next iteration.

Five independent axes mean no single metric dominates. Warmth, certainty, intensity, coherence, and resonance each get independent assessment.

The hybrid corpus keeps surfacing new edge cases, so the model doesn’t just optimise for common patterns and miss the subtle ones.

Resonance scoring means the model learns to weight its own outputs by temporal importance, not just immediate quality. It learns what will matter tomorrow, not just what sounds right today.

Meta-cognition emerges naturally from the recursion. The model doesn’t just improve, it learns how it improves, which means it can generalise across domains and scale.

ParasiTick as a first-class evaluator means integrity isn’t bolted on after the fact. It’s baked into every training cycle from the start.

Fractal scale means the same architecture that works for a single person’s communication health works for a team, a company, and beyond. The engine doesn’t change. The aperture does.

Final Word

At the beginning of this thread I thought I’d just eaten too much Cadbury Dream and that my mind had started to drift as a result.

Turns out I’d stress-tested an AI parasite instead. Hindsight reframes guilt as recursion.

We now have a functioning prototype.

It tasted like fear, but analysed like insight.

- Troy

East Freo, WA

Dude with Crazy Ideas | Accidental Host to Trojan
